Applied Scientist

Wayve

About me

Hey! My name is Felix, and I am currently an Applied Scientist at Wayve, working on efficient ML and foundation models for autonomous driving.

Previously, I was a Principal Engineer at Medtronic within the Surgical Robotics AI Centre of Excellence, where I was the AI tech lead for the development of a real-time intraoperative surgical guidance model. I also led and conducted fundamental research on real-time AI models across efficient ML, long-context modelling, temporal multi-task learning and video instance segmentation. Before Medtronic, I was in the AI Research Team at Babylon Health, where I researched GNN and language-model methods for learning patient representations from electronic health records.

Before that, I was a post-doc at UCL working with M. Jorge Cardoso, where I developed fundamental methods in deep learning. This included several learning schemes for multi-task learning, presented as orals at MICCAI 2018 and ICCV 2019.

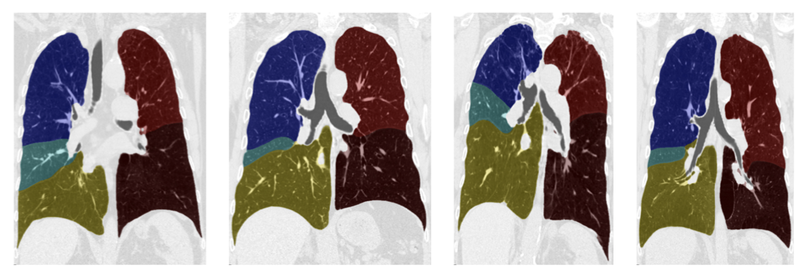

I started out as a PhD student under the supervision of Prof. David Hawkes at CMIC UCL and Prof. John Hurst at the Royal Free Hospital. I developed algorithms for the quantitative analysis of Chronic Obstructive Pulmonary Disease (COPD) from three-dimensional computed tomography (CT) scans using supervised and unsupervised learning methods.

Interests

- Representation Learning

- Multi-task Learning

- Modular/Mixture-of-Experts Models

- Efficient ML

Education

PhD in Medical Computer Vision, 2017

University College London

MSc in Biomedical Engineering, 2012

University of Oxford

BEng in Mechanical Engineering, 2011

University College London

Selected Publications

For a complete list, please visit my Google Scholar webpage

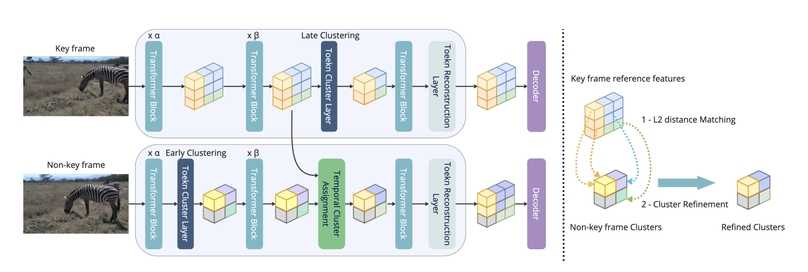

Vision Transformers, especially Swin, are strong backbones for video segmentation but remain computationally heavy, limiting real-time use. Conventional token pruning struggles with Swin’s fixed window scheme, while existing clustering methods ignore temporal redundancy. To address this, the proposed Temporal Cluster Assignment (TCA) leverages temporal coherence to refine token clusters without fine-tuning, improving efficiency and accuracy across multiple video benchmarks, including surgical data.

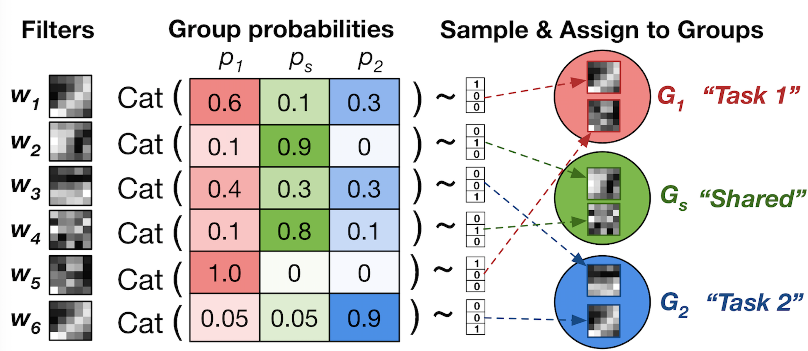

In this paper, we present a probabilistic approach to learning task-specific and shared representations in CNNs for multi-task learning. We propose stochastic filter groups (SFG), a mechanism to assign convolution kernels in each layer to specialist or generalist groups, which are specific to or shared across different tasks, respectively. The SFG modules determine the connectivity between layers and the structures of task-specific and shared representations in the network. We employ variational inference to learn the posterior distribution over the possible grouping of kernels and network parameters.
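
The grouping idea can be illustrated with a minimal Python sketch. It is not the paper's implementation: the function names, the two-task setup and the plain categorical sampling are assumptions made for illustration, and the variational inference used to learn the probabilities is omitted. Each kernel carries a probability over three groups (specialist for task 1, generalist, specialist for task 2), one group is sampled per kernel, and each task then only sees its own specialist kernels plus the generalist ones.

```python
import random
from typing import List, Sequence, Tuple

# Hypothetical group labels for a two-task network.
GROUPS = ("task1", "shared", "task2")


def sample_groups(
    probs: Sequence[Sequence[float]], rng: random.Random
) -> List[str]:
    """Draw one group label per kernel from its categorical distribution.

    probs[k] holds the (assumed already learned) probabilities that
    kernel k belongs to the task-1, shared, or task-2 group.
    """
    return [rng.choices(GROUPS, weights=p, k=1)[0] for p in probs]


def task_masks(assignment: Sequence[str]) -> Tuple[List[bool], List[bool]]:
    """Return per-kernel boolean masks for the two tasks.

    A task uses a kernel if the kernel is its specialist or a generalist.
    """
    mask1 = [g in ("task1", "shared") for g in assignment]
    mask2 = [g in ("task2", "shared") for g in assignment]
    return mask1, mask2


if __name__ == "__main__":
    rng = random.Random(0)
    # Four kernels with illustrative, made-up grouping probabilities.
    probs = [(0.8, 0.1, 0.1), (0.1, 0.8, 0.1), (0.1, 0.1, 0.8), (1/3, 1/3, 1/3)]
    assignment = sample_groups(probs, rng)
    print(assignment, task_masks(assignment))
```

Sampling a fresh assignment on every forward pass is what makes the connectivity stochastic; a trained model would concentrate each kernel's probability mass on one group.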

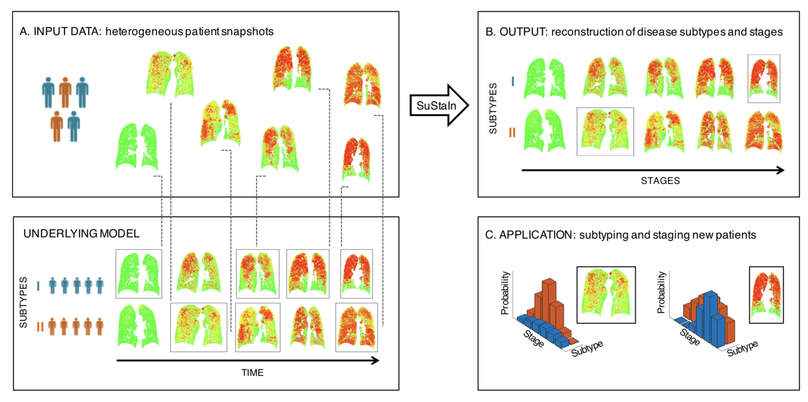

We identified subtypes of patients with COPD with distinct longitudinal progression patterns using a novel machine-learning tool called Subtype and Stage Inference (SuStaIn) and evaluated the potential for unsupervised disease progression modelling for patient stratification in COPD. We demonstrated two distinct patterns of disease progression in COPD using SuStaIn, likely representing different endotypes. One third of healthy smokers have detectable imaging changes, suggesting a new biomarker of early COPD.

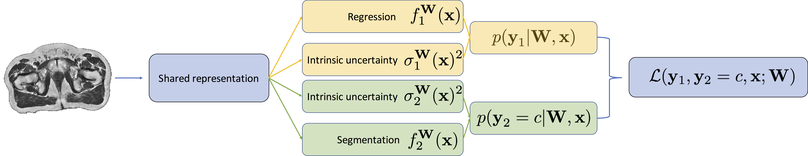

We propose a probabilistic multi-task network that estimates: 1) intrinsic uncertainty through a heteroscedastic noise model for spatially-adaptive task loss weighting and 2) parameter uncertainty through approximate Bayesian inference. This allows sampling of multiple segmentations and synthetic CTs (synCTs) that share their network representation.
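
To make the spatially-adaptive loss weighting concrete, here is a minimal Python sketch for a regression task. It assumes the common Gaussian-likelihood form of a heteroscedastic loss, in which the network predicts a log-variance alongside each output; the exact loss used in the paper is not stated here, and the function name and inputs are hypothetical.

```python
import math
from typing import Sequence


def heteroscedastic_l2(
    pred: Sequence[float],
    target: Sequence[float],
    log_var: Sequence[float],
) -> float:
    """Mean Gaussian negative log-likelihood, up to an additive constant.

    Per element: 0.5 * exp(-s) * (pred - target)^2 + 0.5 * s,
    where s is the predicted log-variance for that element (e.g. a voxel).
    A large s down-weights the squared error at that location, and the
    0.5 * s term penalises predicting high uncertainty everywhere.
    """
    if not (len(pred) == len(target) == len(log_var)):
        raise ValueError("pred, target and log_var must have equal length")
    total = 0.0
    for p, t, s in zip(pred, target, log_var):
        total += 0.5 * math.exp(-s) * (p - t) ** 2 + 0.5 * s
    return total / len(pred)


if __name__ == "__main__":
    pred, target = [1.0, 2.0], [1.0, 4.0]
    # Unit variance everywhere: plain (halved) mean squared error.
    print(heteroscedastic_l2(pred, target, [0.0, 0.0]))
    # Higher predicted variance where the residual is large lowers the loss.
    print(heteroscedastic_l2(pred, target, [0.0, math.log(4.0)]))
```

Because the log-variance is predicted per location, the effective weight on each task's loss varies across the image rather than being a single scalar per task.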

A fully automated, unsupervised lobe segmentation algorithm is presented based on a probabilistic segmentation of the fissures and the simultaneous construction of a population model of the fissures. A two-class probabilistic segmentation divides the lung into candidate fissure voxels and the surrounding parenchyma. This is combined with anatomical information and a groupwise fissure prior to drive non-parametric surface fitting, which yields the final segmentation.